Amazon Web Services (AWS) and NVIDIA have announced an expanded partnership focused on advancing generative AI technology.

The collaboration builds on AWS’s position as a leading cloud provider for AI workloads and NVIDIA’s expertise in accelerated computing and AI chips.

The initiative combines NVIDIA and AWS technologies to support the development of generative AI applications and foundation models. Key projects include building what the companies describe as the fastest GPU-powered AI supercomputer and speeding up AI development with specialized software.

AWS will be the first cloud provider to offer NVIDIA’s GH200 Grace Hopper Superchips with multi-node NVLink, and these systems can scale out using Amazon EC2 UltraClusters. At its AWS re:Invent conference in Las Vegas, AWS also unveiled its Trainium2 AI chip and its general-purpose Graviton4 processor.

Adam Selipsky, CEO of AWS, highlighted the companies’ 13-year collaboration and the broad range of NVIDIA GPU solutions available on AWS for workloads including generative AI. He said that combining NVIDIA’s Grace Hopper Superchips with AWS’s networking, clustering, and virtualization technologies is intended to make AWS the best place to run GPUs.

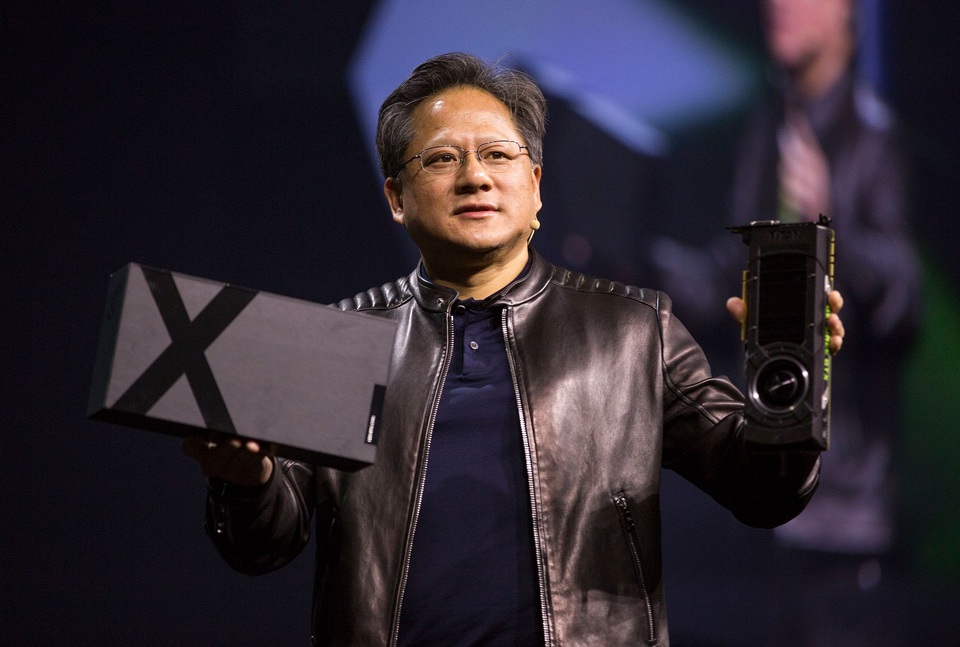

Jensen Huang, NVIDIA’s CEO, said generative AI is transforming cloud workloads and making accelerated computing the foundation for content generation. According to Huang, the joint mission of NVIDIA and AWS is to deliver cost-effective, state-of-the-art generative AI to every customer, spanning AI infrastructure, acceleration libraries, foundation models, and generative AI services.

The collaboration includes several specific projects:

- AWS will bring NVIDIA GH200 Grace Hopper Superchips with multi-node NVLink technology to the cloud. The GH200 NVL32 platform connects 32 Grace Hopper Superchips and will be available on Amazon EC2 instances that combine high-performance networking, advanced virtualization, and hyperscale clustering.

- NVIDIA and AWS will collaborate to host NVIDIA DGX Cloud, NVIDIA’s AI-training-as-a-service platform, on AWS. This will be the first DGX Cloud offering to feature GH200 NVL32, and it is designed for training generative AI models and large language models with more than 1 trillion parameters.

- Through Project Ceiba, the two companies are building what they describe as the world’s fastest GPU-powered AI supercomputer. Hosted by AWS, the system will feature 16,384 NVIDIA GH200 Superchips and deliver 65 exaflops of AI processing power. NVIDIA will use it for its own generative AI research and development.

- AWS will launch three new Amazon EC2 instance types: P5e instances, powered by NVIDIA H200 GPUs, and G6 and G6e instances, powered by NVIDIA L4 and L40S GPUs, respectively. Together, these instances target workloads ranging from large-scale AI training and inference to AI fine-tuning, graphics, and video. G6e instances are particularly well suited to building 3D workflows and digital twins with NVIDIA Omniverse.

NVIDIA also announced software on AWS to accelerate generative AI development. This includes the NVIDIA NeMo Retriever microservice for building chatbots and summarization tools, and NVIDIA BioNeMo for pharmaceutical drug discovery, which is available on Amazon SageMaker and will come to AWS on NVIDIA DGX Cloud. AWS, in turn, uses NVIDIA software in its own services and operations: it trains Amazon Titan LLMs with the NVIDIA NeMo framework, and Amazon Robotics uses NVIDIA Omniverse Isaac to build digital twins.

News source: NVIDIA