TKT1957 at IBC 2023: Interview with David Ross, CEO of Ross Video.

- What are the main trends of IBC 2023, in your opinion?

From what I’ve observed at our stand, it’s been exceptionally busy. It’s quite significant because this is the first time we’ve displayed equipment at our booth since 2019. We’ve missed two IBCs, and now we’re returning to our usual presence. In terms of trends, especially when compared to 2019, which was the last IBC we attended, the world has evolved. There’s noticeably more discussion about the cloud and somewhat less about 2110, yet SDI remains a topic of interest. It’s a fascinating shift. We’re also discussing NDI. The manner in which video equipment is connected seems to be in constant flux. It’s less about a specific trend and more about a world fragmented by diverse ideas, such as whether to focus on current trends, software, or hardware. Essentially, the prevailing trend is the absence of a singular trend.

- What are the primary developmental directions characteristic of your industry segment?

I’d argue that our industry segment primarily revolves around content creation and production. We’re witnessing the rise of REMI production for sports. This involves having cameras on-site and using the internet to relay video back to a control room for production. We’re also introducing something we call “Distro”, a Ross Video term. This concept extends beyond merely returning to a centralized control room; it encompasses individuals working from their homes, reminiscent of the COVID era. An intriguing aspect of remote production is the logistics. Even if you’re transporting staff to the production site and then coordinating with a remote control room, you still incur expenses for their travel, accommodation, meals, and so on. While this setup might enable more productions in a single day, the travel requirements persist. However, the current trend leans towards professionals operating from their homes, eliminating costs like meals since they can cater to themselves. Essentially, the only expense is their time.

- For the longest time, latency has been the bane of everybody’s existence. Have we solved the latency issue or the perception of latency in remote production?

That’s a good question. It really depends on what you’re referring to. If you mean a cloud-based Graphite product, where the production switcher and audio mixer all run in the cloud, they claim that the delay between making the switch and seeing it on screen is only 200 milliseconds. It’s not the same as when there were just one or two green blades where you could sit at the crosspoint and see it work instantly. I wouldn’t recommend doing music production remotely on the cloud. If you opt for that avenue, you’ll end up paying a premium for transmission, especially when you’re using H.264 and going multi-hop through the cloud.

Suddenly, you’re dealing with one to two and a half seconds of delay. It hasn’t gotten faster, so the solution has been to adjust the production method. The camera operator has to stay on the subject for an extra two or three seconds before making a move because the action will be broadcast later. You also have to give your talent a countdown so they know when the broadcast is actually starting. The delay is managed by adjusting the production itself. Personally, I miss the good old days when, with an analog signal, everything was almost perfectly in sync.

- Yes, that’s interesting. In the past, timing had to be incredibly accurate because of color. As technology advanced, timing became less precise. Now, with the introduction of PTP, timing has become even more lax, yet people are still concerned about latency.

I once had a conversation with a software developer, whose name I won’t mention, about the value of asynchronous video and how there’s no longer a need for precise timing. I found that intriguing because when you design a television facility, the first step is usually to synchronize everything going into the router. This ensures that when you switch, everything operates seamlessly. The workaround for asynchronous video involves using frame syncs and video delays. I find it amusing that with cloud technology, audio and video don’t always align.

The more you encode, frame sync, and drop frames, the more out of sync the audio and video become. I anticipate that in a couple of years, those who once praised the value of asynchronous video will be discussing the challenges of lip sync, production delays, and video glitches. It seems we might come full circle on this issue.
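Editor's illustration: the point about encoding and frame syncs pushing audio and video apart can be sketched as simple arithmetic. The snippet below is only a minimal sketch, not anything Ross Video described. The `Stage` type, the stage names, and every delay value are hypothetical, and it assumes that each frame sync delays video by one full frame while leaving audio untouched.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One processing step in a signal chain (all values hypothetical)."""
    name: str
    video_delay_ms: float
    audio_delay_ms: float


def av_offset_ms(stages):
    """Return the accumulated audio/video offset in milliseconds.

    A positive result means video arrives later than audio.
    """
    return sum(s.video_delay_ms - s.audio_delay_ms for s in stages)


# One frame at an assumed 50 frames per second.
FRAME_MS = 1000 / 50

# Hypothetical chain: stages that delay audio and video equally add no
# offset; each frame sync is assumed to delay video only.
chain = [
    Stage("contribution encoder", video_delay_ms=400.0, audio_delay_ms=400.0),
    Stage("frame sync", video_delay_ms=FRAME_MS, audio_delay_ms=0.0),
    Stage("cloud switcher", video_delay_ms=200.0, audio_delay_ms=200.0),
    Stage("second frame sync", video_delay_ms=FRAME_MS, audio_delay_ms=0.0),
]

print(f"Accumulated A/V offset: {av_offset_ms(chain):.1f} ms")
```

Under these assumed numbers, only the two frame syncs contribute to the offset, which is why every extra uncompensated sync stage makes lip sync harder to hold.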

- Would you say that we’ve come to a point where we accept the delay?

There’s a certain level of acceptance regarding the delay, and it’s challenging. I’m sure that if someone could develop a solution for H.264 remote transmission and cloud production with minimal delay, it would be a game-changer. However, we haven’t reached that point yet.

- What new products is your company showcasing at IBC 2023?

We’re introducing extended reality production solutions. Extended reality is akin to virtual production, where elements are placed either in front of or behind the talent. Previously, we achieved this using green screens. Now, we’re implementing a Ross LED screen behind the talent. This eliminates issues like edges and shadows, and even allows the talent to wear green in front of the screen. Leveraging the Unreal Engine, when a camera captures a scene in the background, it can peer into the 3D world. As the camera moves, the on-screen view adjusts accordingly, providing a realistic perspective from that camera position.

- Are you utilizing your own camera tracking system or a third-party solution?

It varies. Currently, at the show, we’re demonstrating two camera types. The first is Furio: a dolly-based camera that moves along a track and knows its precise location in 3D space, eliminating the need for additional tracking. The second is a jib, which is known for sag and wobble. Even if you believe you know the jib’s position, the camera’s exact location remains uncertain. In such cases, we resort to third-party tracking solutions, and we’re compatible with all major systems. We integrate both camera systems into our engine and manage them using our Lucid controller.

When operating in an environment that uses the Epic Games engine as its core, as our Voyager system does, it’s essential to remember that it wasn’t designed for broadcasting. Epic Games doesn’t cater to MOS, real-time requirements, or the unpredictability of live production, including data integration. That’s where our Lucid system comes into play: it serves as a control panel for the 3D world.

- After the first NAB post-pandemic, every booth had an LED wall. I expected to see AI in every booth the following year, but that wasn’t the case. Do you think extended reality will gain traction in news production?

There’s a clear advantage to using XR with LED over a green screen. The main benefit is that the talent can interact directly with what’s displayed on the screen. With a green screen, for segments like weather forecasts or presentations, the presenter often has to glance at a monitor, which might not be aligned with the camera’s position.

- Indeed. Do you envision production sets evolving frequently, incorporating minimal practical elements to enhance value?

That’s an intriguing observation. We’ve noticed a trend where entities invest in LED walls, tailoring them to fit practical set requirements. However, this isn’t a budget-friendly option. An LED wall can set you back anywhere from $200,000 to $500,000, depending on its size. Some producers still favor green screens as a cost-saving measure, despite the challenges they present, such as flickering shadows, inconsistencies along the edges, and the talent having to estimate the placement of post-production effects. With LED, the visuals are consistent and high-quality right from the start. While chroma keying might be more affordable upfront, there are other costs to consider in terms of production quality.

- Are there any advancements in incorporating AI into your offerings?

Certainly. We’re showcasing our MAM product, Streamline Pro, for the first time here at the exhibition. It’s a fusion of the product we acquired from Primestream, our existing MAM Streamline, and a slew of new technologies, complete with a revamped user interface. This product seamlessly integrates with our Inception news system and graphics suite to deliver a comprehensive solution. Within this system, we utilize AI for tasks like metadata generation, data tagging, and even video editing.

- Is the AI proprietary, or is it sourced externally?

For now, we’re leveraging external AI. Ultimately, we often rely on external AI solutions, building upon the groundwork laid by others. However, our unique implementation is what sets us apart. That being said, this isn’t our sole AI venture. Within our camera motion systems, we’ve developed Vision[Ai]ry, which utilizes our proprietary AI for facial, head, and body tracking, enabling cameras to maintain focus on specific talent. Now in its second year, Vision[Ai]ry has garnered significant acclaim. Feedback suggests it’s currently the industry’s premier AI-driven camera tracking solution—a testament to the substantial investment we’ve made in its development. One challenge we’ve encountered is the system’s occasional inability to recognize talent following sudden movements. Additionally, when multiple individuals are present on set, the camera might struggle to focus on its primary subject, especially if others walk in front. Further complexities arise when programming the AI to guide camera robotics, dictating zoom speeds, rotation timings, and movement patterns. There’s a myriad of intricacies associated with this type of AI. At this show, we’re pushing the envelope further, exploring how to efficiently manage multiple cameras in real-time, especially when they’re all active simultaneously. The key lies in crafting an intuitive user interface.

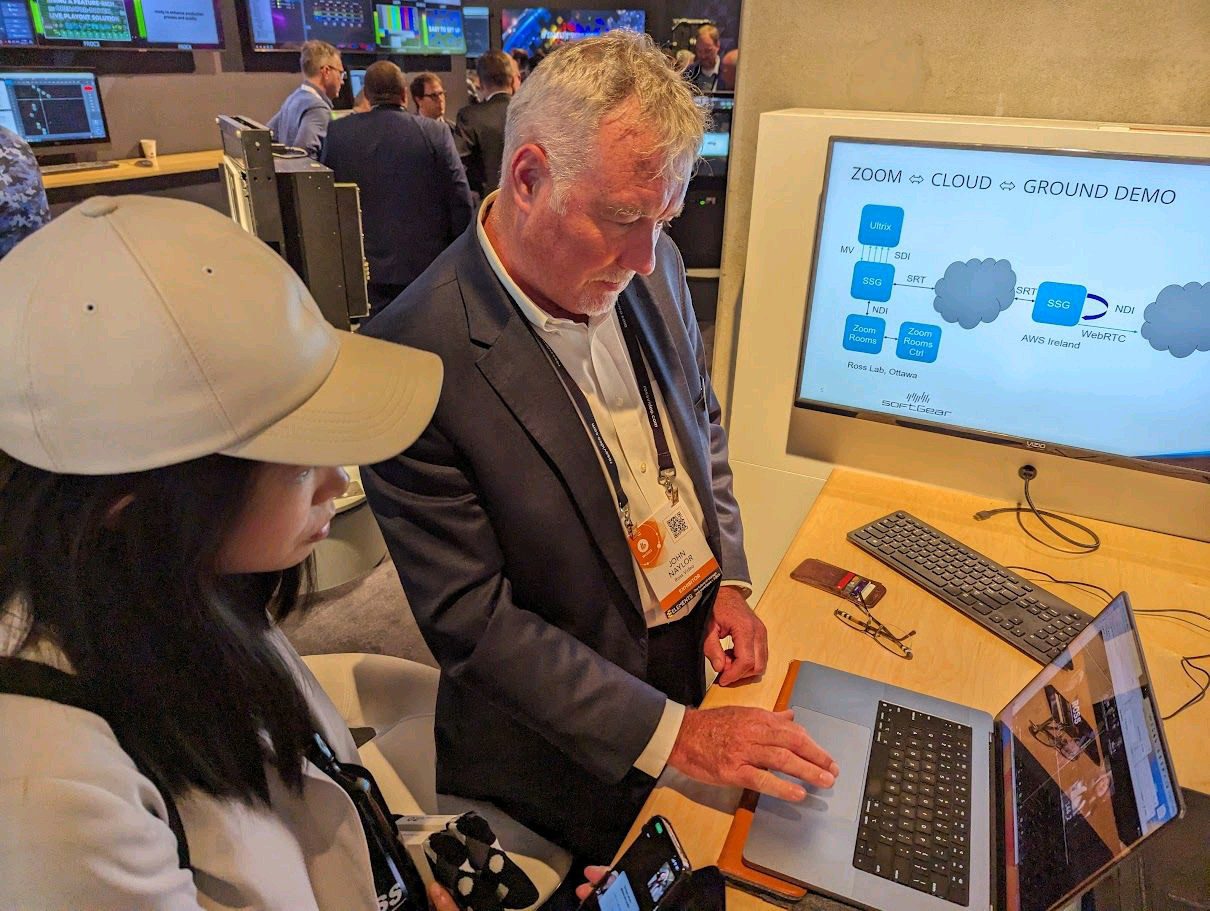

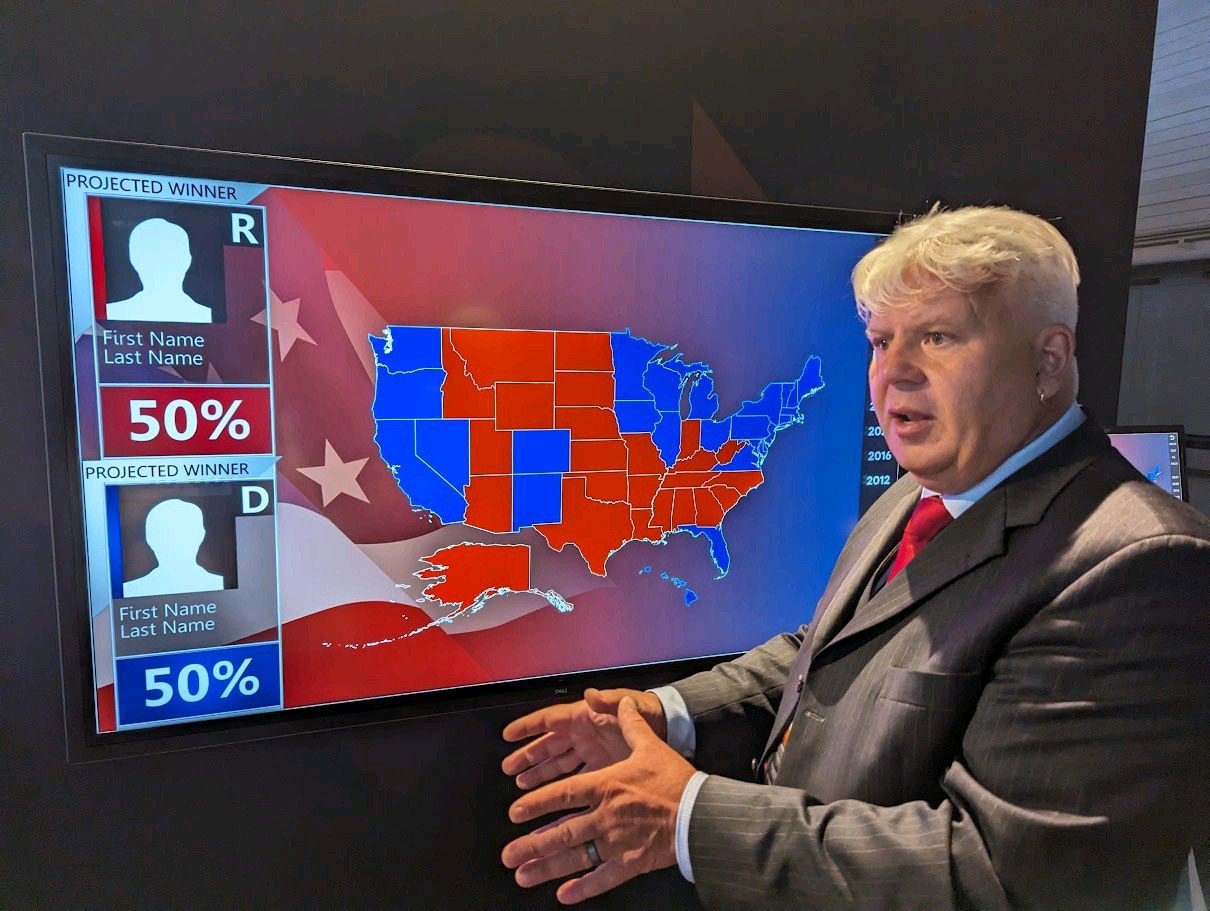

Images provided – Ross Video