Interview with James Uren, Technical Director at Mo-Sys Engineering Ltd.

-

To begin with, how was IBC for you? What did you see as the main trends at IBC 2023?

IBC was truly exhilarating, especially from a technical perspective, and it was bustling. For us at Mo-Sys, it's always a platform to showcase our latest innovations, mainly in virtual production, where we are currently most active. We started out in camera robotics about 25 years ago and then moved into camera tracking, with Star Tracker as one of our flagship products. Star Tracker is now in its fourth generation with the Star Tracker Max, a more compact yet more powerful camera tracking system. Our focus has since expanded to complete virtual production solutions, covering both software and hardware that go well beyond camera tracking. IBC was an ideal venue for us to present those broader solutions.

-

Have you incorporated AI into your camera tracking?

At the moment, machine learning isn't directly applicable to that technology, although I do see potential applications in the future. AI and machine learning were clearly a trend at IBC, and we showed some applications of them ourselves. Across the wider tech landscape, AI is being used in many contexts, but it's essential to apply it judiciously, only where it is genuinely the right tool. It's not a one-size-fits-all solution. In fact, a significant new product we unveiled at IBC does use machine learning. It's a component of our VP Pro XR software suite.

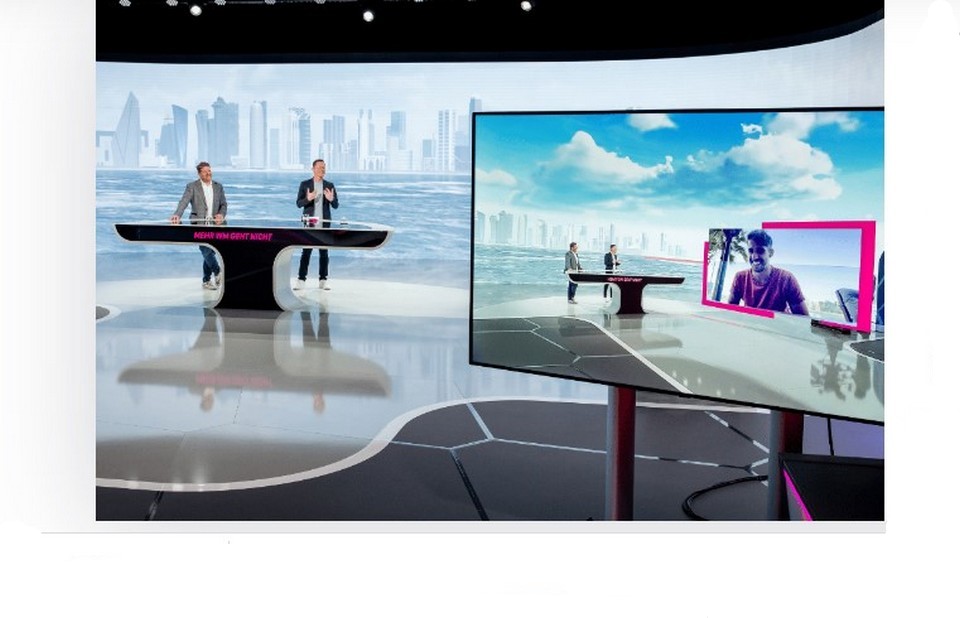

VP Pro runs natively inside Unreal Engine, making it usable for virtual production. Unreal Engine is a game engine: it excels at rendering, but it lacks some of the tools needed on set or in the studio. We've spent roughly seven years developing VP Pro to bridge that gap, so that users can work in Unreal Engine natively for virtual production. Naturally, it integrates with our tracking systems, provides compositing, records data, and offers a toolkit for visual effects workflows. It also includes a suite of tools tailored for LED volumes, called VP Pro XR. This suite provides set extensions and cinematic XR focus, which allows seamless focus transitions between physical objects and objects rendered within the LED environment. The set extension tools create a complete 360° environment around the camera, extending beyond the LED backdrop behind the subject.

At IBC we unveiled a major new release. Our CEO conceived the idea at the previous year's IBC, in 2022, and within 12 months we turned that concept into a complete software product, which several of our clients are already integrating.

The main objective of the release is to make it practical to use multiple cameras in front of an LED wall. There are various ways to do this, such as frame switching or frame interleaving. One specific variant is "Ghost frame", in which multiple cameras shoot the same LED wall while the wall alternates between the images rendered for each camera. However, this approach has its challenges. First, it demands significantly more rendering power, multiplying the rendering requirements by the number of cameras in use. Second, in many productions the audience only ever sees one camera at a time, so rendering for every camera simultaneously is often unnecessary.

Our solution, MultiView XR, addresses these challenges. It switches the LED wall between cameras, compensating for the inherent delays in the system so that each transition between camera views is seamless. MultiView XR also uses AI to give directors a multi-camera preview.

-

Can MultiView XR be used in a live broadcast, or does it require editing in post-production?

It's designed for live use. The preview component is made specifically for the director in the gallery, so it isn't broadcast. What the audience sees is the live camera feed with the correct background. Essentially, it's a cost-saving technique for using multiple cameras in a live broadcast.

-

I've spoken with several companies, and I recall one offering a similar solution. Is this a common technology sold under different brand names, or does Mo-Sys offer something distinct that competitors haven't yet achieved?

Our offering is genuinely innovative. We've developed and patented several techniques, from our tracking system to our cinematic XR focus. Cinematic XR focus is in fact my own invention, and we've patented it. It integrates with focus control motors to improve focusing in LED volumes. MultiView XR is exclusive to Mo-Sys. We're pioneering the switched director preview, which lets directors see the correct perspective from every camera angle.

-

Which upcoming exhibition will Mo-Sys be attending? Where can clients and potential partners meet you next?

Our next major appearance will be at ISE in Barcelona in February, where we'll be showcasing numerous innovative technologies alongside various partners. As at IBC, we'll be working with several partners, so you'll find us on multiple booths throughout the event. For exact details of where to find us, keep an eye on our website, news channels and social media.