Welcome to TKT Visionaries, our monthly Roundtable where we bring together Visionaries who are leading the industry with the latest technology and services on some of the most amazing things I’ve ever seen in the industry.

Today we have our special guests talking about XR and VR:

Justin Wiley: He’s a Technical Director of Arc Studios and has over a decade of film industry experience as both a cinematographer and a producer. He brings a wealth of knowledge to his role as Technical Director for Gear Seven, Shift Dynamics, and Arc Studios, where he oversees the procurement and implementation of the cutting-edge cinematography tools and applications that Gear Seven and its partner brands use to deliver state-of-the-art results in video production.

Addy Ghani: He’s the VP of Virtual Production at disguise. Addy is immersed in the studio workflows and industry standards for real-time visualization for film and television. He is building a disguise creator community and strategies that will further the proliferation of virtual production. Prior to joining disguise, Addy designed and built world-class stages for DreamWorks, Verizon Media, and NBC Universal to help creatives visualize their dreams. He’s an unofficial Unreal Engine Evangelist and Fellow.

Philip: I’d like to welcome you all to the show today and thank you for being my guest. We also have Anatoly, he’ll be telling us a little bit about his background real quick.

Anatoly Goronesco: I worked for the Olympic Games, several games actually, like the Tokyo Olympics where I oversaw the Immersive Technologies and Licensing between the organizing committee, Olympic broadcast services, and Intel which was our supplier at that time.

Philip: Fantastic! I’d like to start my first question with you, Addy. What is the difference between XR and VR? You hear those terms used a lot, but how do you explain them to the layman?

Addy: XR is extended reality, and VR, well, we like to call it VP, virtual production. Generally, those two things can fall under the same umbrella, which can be confusing, but the way we at disguise differentiate them is that XR typically uses mixed reality elements like augmented reality foregrounds, set extensions, background inclusions, and things like that, whereas VP is strictly for a technique called in-camera visual effects: the art of eliminating green screens and adding in a real-time environment. VP is generally reserved for scripted film and TV content, whereas XR is for live events, musical concerts, and really more spectacular real-time events.

Philip: Fantastic! Justin, anything to add to that? Do you see it a little bit differently in your world?

Justin: No, not at all. I definitely feel like we’re just getting to the point in the industry where terms are kind of landing and we’re all like okay, yeah we’re gonna call this… that name, we’re going to call this… this name, and it’s kind of been helpful as we’ve all just been figuring all this out over the last couple of years say. It’s actually quite helpful because we understand that we basically use a lot of the same tools to power all these different events or filmmaking tools but the difference is the end product derived from using those tools differently in slightly different scenarios.

Philip: Alright. Anatoly, do you have anything to add to that? I mean from an (I guess) European perspective. Do you guys separate out XR/VR in the same way?

Anatoly: Yeah, definitely we do. I would like to explain using the concept of New Media. These two words describe way more than XR, VR, and AR. Those terms are probably difficult for a wide audience around the world to understand, so New Media might be the next term to describe what we’re talking about. And I would like to add a very important concept, which is that regular broadcast is migrating, and maybe evolving, to a New Media where the internet and interaction, like conferences, will be the new language for communicating with your audience. It’s all about interactive experiences where you can touch objects, whether they are virtual or mixed reality or whatever; these interactions are very useful and can describe way more. Currently, we’re working on a web-free concept where you don’t need to install any additional applications, so we’re working on a one-click browser experience that would lower the barrier to entry for lots of people to try it. Otherwise, you need lots of adjustments and so on. Those are, let’s say, my ideas.

Philip: Justin, from that perspective, what technology do you think “is missing” or what would you like to see added? You’ve been involved in creating these types of productions. Have you ever imagined a scenario where there’s this one piece or if the technology did just a little bit better I could do more. What is that piece? I’m sure that like all of us, there’s a giant list but is there something you can sort of narrow down?

Justin: I think there is. We’re exploring a lot of these new tools, or at least finding new ways of doing the old things. We’re trying a lot of these new things and saying, okay, this is incredible, but once we get things like color, pipeline, and workflows figured out to where it’s just super clean, when the image that you see on your laptop when creating a scene in Unreal matches what the camera should see at the end of the pipeline, the tools are going to be even easier to use. A lot of these tools are still in the R&D phase, so we’re kind of stress testing. I’ve been figuring it out, and sometimes we’ll be like, “Okay, that got us like 99 percent there.” But I’ve always thought there are a lot of realms where it’s just going to get easier and easier to do these things. From a creative filmmaker’s point of view, it gets even easier for me to say, well, I’m writing a story and I’m going to set it on top of a mountain somewhere, even though I might not have the budget to send a crew up the side of a mountain.

Philip: Addy, maybe to help people understand, what does disguise do, and how do you fulfill the needs of people like Justin who are building these productions, or of other companies?

Addy: Absolutely! At disguise, we make the technology that powers all of the virtual production and XR stages around the world that we support. We make incredibly high-performance servers and the very custom software that runs on them. We then make the tech integrations, like plugins, that work with pretty much every major technology player in this space. So at disguise, we not only make the technology, we also help train you on it and support you throughout the process. And now, with Meptik, we’re able to offer a service to build stages, or perhaps build the content that you’re gonna need after you build that stage. So we do it all.

Philip: Anatoly, you obviously have done a lot of this and I’m assuming some other sports-related business. Where do you see the technology driving the type of work that you do, especially in Europe?

Anatoly: I see great potential in AI-based production, with AI both directing and totally replacing cameramen, using machine vision tools that allow you to use panoramic cameras. You have an AI director who can pan and tilt the image inside the panorama. We are also working with the concept of points of presence at the event: you can distribute mini cams that capture full spherical images for virtual reality, and then you can create a virtual twin of the venue in mixed reality. For example, you can create 3D scenes with these points of presence, spots where you can send your customers to see the content from a particular position without any general director or somebody who decides what’s interesting and what’s not. You just get the feeling of full-fledged presence at the event, like behind the goals, in the changing rooms, or at the arrival of the teams, with any kind of motion.

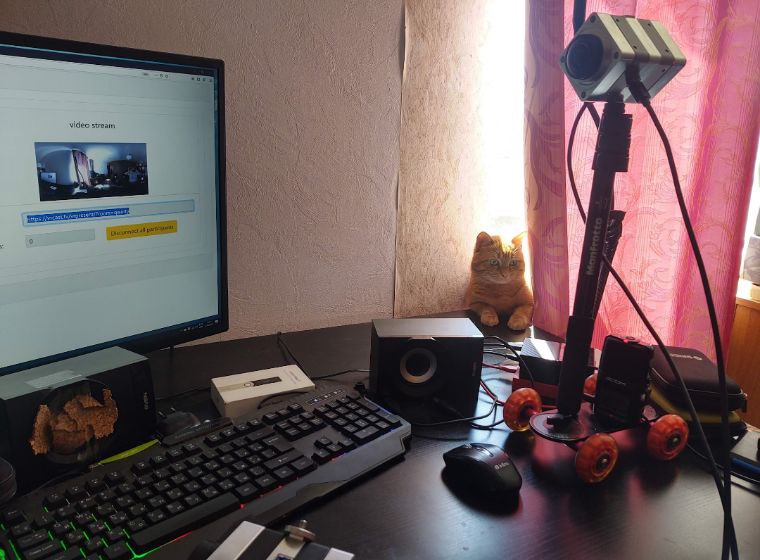

If you allow me to share my screen, I can show you what I’m talking about, because I have the setup next to me right here in the office, if you would like to see how it works.

How it works is that we just join the room, and at the same time there is 4K live streaming, all working in a browser-based implementation of the platform. Our idea is to distribute these around multiple venues, maybe in multiple countries, so we can offer our customers an outstanding experience where they can be there in real time.

Philip: Okay. Addy, with disguise, from my understanding you’re a little agnostic in terms of some of the components because you’re really that glue that holds it together. What are the main manufacturers around that camera tracking and the different types of technologies that are used around that component?

Addy: Sure. So there are basically two different families of camera tracking technology: outside-in tracking and inside-out tracking. Outside-in uses fixed cameras around your stage to track a moving object, and inside-out is quite the opposite.

With inside-out, you have a sensor camera mounted on the camera system that is looking up at fixed markers. For outside-in, optical systems from the motion capture world, like Vicon and OptiTrack, are very common; because they are able to track human bodies, they can track a camera much more easily. On the other side of it, Ncam and stYpe are the two big technologies in this space. Of course, you also have Mo-Sys. disguise supports all of them. We even go as far as to support HTC Vive and things that are on the consumer level.

Philip: I did see a post recently since AI is the big thing that everybody’s talking about. I saw recently a company talking about being able to replace mocap suits with AI. Do you see AI coming into that camera tracking world more or is it still too complex?

Addy: No. I think that at some point, AI systems will be able to track cameras. The system you are talking about is Move.ai, a technology we just integrated with disguise and announced at NAB. It can track anybody on set in an LED volume without the traditional markers, suits, and cameras that mocap requires.

Philip: Okay. Justin, you hear the term Unreal Engine, and I believe there’s another system for generating backgrounds as well. When you’re doing a production, is there one that you prefer over the other, or one that gives you more realism and more capabilities? How do you go about planning development for a production in terms of what’s going to drive that background?

Justin: I think it’s all about use cases and figuring out what the scope of the project is. Unreal is incredibly capable and incredibly complex; you can use it to generate full 3D environments as well as 2D-based renders and outputs. A lot of times we’ll stage things into a few different categories. One is 2D plates, where you have either video playback or still photos up there and that’s just the background you want to use. It could be as simple as taking a photo of your backyard and putting it up there, all the way to fully customized 3D environments like you would create for a video game, where you’re able to move the camera around and customize on set. We’re finding that a lot of that work is happening in Unreal, and one of the other tools is Unity. It’s another system that’s used to create video games, so you can create these highly detailed but also efficient environments and sets that you can build to shoot in, the same way a traditional carpentry team would build a set in a studio. You have infinite flexibility if you have the time to build it out, which is what makes a lot of this so great. But we have loads of clients and loads of projects where we’ll say, “Well, we don’t really need all of that, we really just need a background plate of the kitchen.” And we can find different ways to use either a video plate or even still images to achieve the same goal.

Philip: Anatoly, are you finding the same thing in the work that you’re doing in terms of how you predict or project what would be the driving force whether it’s Unreal or Unity? Do you see Unity in the European market as well?

Anatoly: Certainly. We see that Unreal Engine is more about video-realistic rendering. They are focused on MetaHumans; they would like to create artificial people. And this technology is really mind-blowing in terms of working together with New Media. Unity is more entry-level and easier to start with. However, we are thinking more about mixed reality and the concept of web browsing, and I do believe in the Pixel Streaming that Unreal Engine is offering right now. We are waiting for a major update from Google that will bring an important milestone: support for WebGPU rendering, which allows you to create 3D scenes in a browser. This is likely to be 10 times more powerful than the current solutions, and we will be able to create way more immersive experiences. And if we look at Meta, we’ll see that they have a new vision: they’ve stopped talking about the metaverse and have started to talk about immersive games. We do believe that web browsing, web-free technology with NFT crypto, and combining 3D worlds together, this kind of metaverse created in browser versions, will be the big future. I’ve got a request: can I share my screen to show our experience with AI and with points of presence?

Philip: Addy, as disguise is building out its software to integrate and simplify the process, are you seeing more corporate customers coming on board or asking for systems?

Addy: Yeah. 100 percent. We actually just introduced a product server that is specifically geared for this market called the EX3, so it’s priced to be much more affordable and you still get the same benefits of disguise that come from a feature film or high-end broadcast but now we’re just bringing that reliability to that space.

Philip: Anatoly, are you doing more corporate sales, or is it still mostly larger productions in Europe?

Anatoly: Yeah, I think so. We see interest coming from both sides: from B2C clients, bloggers and influencers interested in a new language for talking to their audience, to brands searching for new methods of communication. Though I think there is more corporate interest here. Also, small companies are more flexible in implementing innovations.

Philip: I’ll throw this to Justin. What do you think is holding back smaller customers from getting into this and utilizing XR and VR?

Justin: I think that as a technology, it’s getting a lot better and becoming way more accessible to a lot of people. There’s a level where you can do a lot of this even on your phone or with your TV. It ranges from something as simple as putting up content on your TV and filming on your phone to using green screens and compositing on your laptop with Unreal Engine. There are a lot of very simple, accessible ways to do it. I’m loving seeing a lot of YouTubers being like, “Just let me get in and figure this out to see if I can actually pull this off,” which is really cool. A lot of the clients we have will be like, “Hey, I have this really cool idea for a music video and I don’t have a hundred thousand dollars. Is there a way that we can make this work, or maybe try a new thing and find some way on the production side?” And we’re like, “This could be a super compelling use case; we can test out this new idea or piece of gear by finding different ways to try new things.” That has been really valuable for us, as has trying to find ways to educate people on how this whole thing works, how it’s different from traditional production but also, in a lot of ways, how it’s not. The more educated people get, the less likely they are to see it as a big scary technological hurdle that’s just gonna end up being a nightmare. We’re finding ways to help people understand the tech, how it works, and that it isn’t only a Star Wars/Star Trek type of thing but something that is accessible to way more people than they think.

Philip: Excellent! Anatoly, I think you have some samples that you want to be able to show and maybe talk a little about what your customers have done or what you’ve done for your customers.

Anatoly: Yeah. I think it will be really useful to see what we have done previously. Here we go. We did this use case in Pittsburgh last year in May. Here’s the Pittsburgh arena, and this is just a 360 photo for the home screen. We also have a menu button, and the whole thing works just in a browser, on a web page. We can teleport between different locations, and this is the first one. This is point number one, at the media there on the seventh floor, and if people would like to teleport to spot number two, it’s pretty much like teleporting by looking when using a VR headset. We have a pointer, so it’s enough just to look at the red spot right here. And we’re running this production totally remotely; we can zoom in and out.

Philip: Interesting. This brings up a question for Addy with the VR and XR studios. Are you seeing more adoption or more push for 3D VR and productions that support VR headsets?

Addy: Yeah. So I come from the VR/AR world and now coming into virtual production and XR, I will definitely have to say that those two things are now two different things. I’m not seeing stereoscopic use of VP stages at the moment.

Philip: Is there a technical challenge when producing the frustum… I think it’s where you have a clear image. Doing that in the VR-type world would require I guess the entire stage.

Addy: You would need to essentially render two cameras at the same time with the correct interocular distance and you would have to use technology like GhostFrame for example to capture two perspectives at the same time. It is possible and I’m sure somebody is doing it but it’s not something that’s mainstream.
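To make Addy's point about rendering two cameras concrete, here is a minimal Python sketch of where the two virtual cameras would sit relative to the tracked camera position. It is only an illustration, not how disguise or GhostFrame implements stereo: the `Vec3` type, the `stereo_eye_positions` function, and the 0.064 m default separation are all assumptions introduced for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def stereo_eye_positions(center: Vec3, right_axis: Vec3, interocular_m: float = 0.064):
    """Return (left_eye, right_eye) positions for a stereo camera pair.

    center        -- midpoint between the two virtual cameras, in metres
    right_axis    -- vector pointing to the camera's right (any non-zero length)
    interocular_m -- separation between the two cameras in metres
                     (0.064 is an assumed default, not a value from the talk)
    """
    norm = math.sqrt(right_axis.x ** 2 + right_axis.y ** 2 + right_axis.z ** 2)
    if norm == 0.0:
        raise ValueError("right_axis must be non-zero")

    # Offset each eye by half the separation along the normalized right axis.
    half = interocular_m / 2.0
    ox = right_axis.x / norm * half
    oy = right_axis.y / norm * half
    oz = right_axis.z / norm * half

    left_eye = Vec3(center.x - ox, center.y - oy, center.z - oz)
    right_eye = Vec3(center.x + ox, center.y + oy, center.z + oz)
    return left_eye, right_eye


if __name__ == "__main__":
    left_eye, right_eye = stereo_eye_positions(Vec3(0.0, 1.5, 0.0), Vec3(1.0, 0.0, 0.0))
    print(left_eye, right_eye)
```

Each eye position would then drive its own render of the scene, which is why a stereoscopic volume roughly doubles the rendering work and needs a way to show and capture both perspectives.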

Philip: Okay. And Anatoly, you’re showing… I think that was the EFC.

Anatoly: EFC, a fighting championship, at Miami Stadium. We did it recently, last March, in Miami. And yeah, I would like to add something about 3D stereoscopic images. In my experience, 95 percent of people have this [shows a device], and that’s why we’re focused on monoscopic 360 images. The fashionable 3D 180 format is designed especially for VR goggles, and most of the people I met in the US while traveling last year have a thick layer of dust on their Oculus, because it’s too complicated for an average person to maintain, from constant updates to registration to the virtual keyboard. It takes a lot of effort to operate, and that’s why we focused on web browsers and monoscopic 360 images, where you can pan, tilt, and zoom in and out. I was just showing you the NHL and fighting cases, and I would like to show you another one, which is all about artificial intelligence. Here we go.

What we are actually doing is that our algorithm allows us to watch the game without watching the puck. Basically, we read the context of the game and analyze the players as skeletons; this way we can predict where the game is going. Our product is the 2D screen at the top. The end user never uses VR, because we use the panoramic images just for AI production.
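The idea of an AI director that pans and tilts a 2D view inside a panorama can be sketched in a few lines of Python. This is a simplified stand-in, not Anatoly's algorithm: it assumes player positions and velocities have already been extracted (for example, from skeleton tracking), and it simply centers the crop window on where the players are expected to be shortly. The function names, the `(x, y, vx, vy)` tuple layout, and the `lookahead_s` parameter are all hypothetical.

```python
def clamp(value, low, high):
    """Limit value to the inclusive range [low, high]."""
    return max(low, min(value, high))


def virtual_camera_window(players, pano_w, pano_h, view_w, view_h, lookahead_s=0.5):
    """Pick a crop window (left, top, width, height) inside a panorama.

    players     -- list of (x, y, vx, vy): position in panorama pixels and
                   velocity in pixels per second (assumed to come from an
                   upstream tracker)
    lookahead_s -- how far ahead, in seconds, to extrapolate player motion
    """
    if view_w > pano_w or view_h > pano_h:
        raise ValueError("view must fit inside the panorama")

    if players:
        # Aim at the average of the players' extrapolated positions.
        cx = sum(x + vx * lookahead_s for x, _, vx, _ in players) / len(players)
        cy = sum(y + vy * lookahead_s for _, y, _, vy in players) / len(players)
    else:
        # With nobody tracked, fall back to the middle of the panorama.
        cx, cy = pano_w / 2, pano_h / 2

    # Keep the window fully inside the panorama.
    left = clamp(cx - view_w / 2, 0, pano_w - view_w)
    top = clamp(cy - view_h / 2, 0, pano_h - view_h)
    return int(round(left)), int(round(top)), view_w, view_h


if __name__ == "__main__":
    tracked = [(1000, 500, 100, 0), (1200, 500, 100, 0)]
    print(virtual_camera_window(tracked, 4000, 1000, 1280, 720))
```

A production system would presumably smooth the window over time and weight players by their role in the play; the sketch only shows why the ball or puck itself does not need to be tracked for the framing to follow the action.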

Philip: Interesting. That brings up an interesting question which is the 800-pound gorilla in the room. We’ve all heard of Apple producing some type of augmented or virtual reality goggle glasses. Justin, do you see that impacting or driving anything that you may be doing in the future… if all of a sudden everybody’s got AR/VR glasses?

Justin: I think one of the most compelling use cases for me as a filmmaker, and we can even do this now with headsets, is in pre-production and planning. It used to be that when going on a location scout to find a street or a field, everyone would pile into a van and we’d all go there together. Now we can scout locations that are built in Unreal in a headset. I could be in Nashville, someone else could be in Atlanta, and another person could be in LA, and we could all jump on the same session, put our headsets on, and walk around the same environment. We could point things out, like “Hey, let’s get rid of this tree, I want to change this to that,” and we can collaborate in real time, in the same environment, from around the world. I think that type of use case for us as filmmakers is going to get even easier. As those tools get easier and less cumbersome, whether we have glasses or whatever, and however crazy that technology gets on the consumer side, we filmmakers and entertainment professionals are just going to look for ways to say, “Can this make my job easier? Can this take away work that would normally take me a whole day to do?” Or take a scenario where I would have to wait for a call with three people and instead we could just collaborate in real time with some of those tools. That to me gets super exciting, and there are different ways that we can utilize those.

Philip: Interesting! I come from an architectural engineering background, and there are millions of technologies that I look forward to playing with, so I keep thinking, oh man, I could use this to build our retirement house: I’m going to construct it in an Unreal environment so I can show my wife what it’s going to look like. Addy, as the unofficial evangelist for Unreal, as specified in your bio, do you see the adoption of those XR, VR, and AR headsets (I would assume with Apple getting behind it, if it does come to fruition) pushing Unreal to the next level? I think they just had a recent release that actually had some additional capabilities. Do you think that’ll keep driving that product?

Addy: Yeah. Absolutely. I think the headset is a piece of hardware and you’re going to need content to then push that hardware. Where is all that content going to come from? That’s going to come from real-time game engines. So, Unreal is still a very small community of creators and artists and I think something like that will help push that community to a bigger scale so we’re going to see more and more creatives and people that can enable the visualization into fruition.

Philip: Maybe you can clarify something for me on the Unreal side, and maybe for the audience in general. Are things constructed in Unreal from scratch, or do you bring in models? Does somebody have to construct a tree to go into the environment? How does Unreal interact with, say, the other 3D applications that are out there?

Addy: Yeah. Both. For the highest end of applications, for VFX and for high-end feature films, I would say nothing in Unreal is built in Unreal; it’s all imported. But for a VR application or a consumer application, you can certainly grab a lot of assets from the Epic Games Marketplace, from Quixel Megascans, or even TurboSquid. So there are online marketplaces out there where you can just buy assets and then drop them into your Unreal scene.

Philip: We have a question for Amar Hina who did a big sports project in Saudi Arabia in the United Arab Emirates. I wonder if you could talk a little bit about your XR/VR experience.

Amar Hina: We did a couple of projects with XR/VR, but frankly, connected to sports, I don’t think so. I decided to join because you were discussing a couple of points regarding the hardware part. I don’t think this is something we’d work with in the world of sports, but it certainly is useful in the broadcasting and production field. I think the future may be more about virtual elements, virtual productions mixed with VR/XR, but mostly augmented reality elements. I believe the integration between data and stats and the TV output might be something interesting. A couple of other areas that should be concentrated on in the future are things like special cameras. There are a couple of companies using new elements like a mix of AR and XR with special cameras, and I believe this is something nice to see. It gives a better flavor to the output. We did a couple of projects with a couple of companies mixing augmented reality elements with special cameras like drones, sky cams, and some other moving cameras, and it went very well. I believe it’ll be interesting to see more of it.

Philip: Excellent! I appreciate the input. Addy, when HDR first came out, everybody overused it, and the same with drones. Do you see that potential in it? I haven’t seen it personally yet, maybe in some lower-budget films. But do you think that’s a potential where people will push it beyond what it’s really designed to do or to help with?

Addy: I mean, the technology that we’re talking about, large LED volumes, is just expensive, so we have probably gone past that phase already in the last two years. The business cases that make sense are still here and are making incredible content. The ones that just came and went are behind us.

Philip: Okay. Fantastic! Every once in a while I’ll see a film and I’m like “Is that on a set? Is that real? Did they push it too far?” But I’m glad to hear that maybe we’ve gone past that quickly. That’s definitely a good thing.

Philip: Justin, in your world, you’re the one who pushes it too far. Obviously not you personally; you’re gonna keep it real there, as they say. One of the things I noticed when looking at some spaces early on was the whole “I have the sky, I have the floor” approach. Does it really make sense to try to do that fully immersive environment with everything, or does it make sense to have practicals in there, to have some set pieces so that you have the air of reality? Do you find people doing more than just trying to do it all in the virtual world?

Justin: Yeah. I think you hit it right on the head. That was the best use case right out of the gate, and it makes sense if you have a TV show with a guy in a full chrome suit, so you have reflections all the way around; there are definitely some benefits there. But then everybody was like, “Well, it’s probably not best for every scenario that we only light with LED panels. We want to use traditional lights; we want to shape that light as a cinematographer.” I want to create an image that points the audience to what I want them to see, or to hear the story that we’re trying to tell, so it’s about shaping that image and finding the best way to do it. Where we’re at right now, LED panels don’t reproduce great light on skin tones, so we need great lights to help skin tones while the LED panels do what they do really well, until those things slowly, over time, become the same thing. So there’s definitely a middle ground, a balancing act, in using tools to solve the problems that they can solve really well. We want to bring all those things together to tell a good story by using the tools for what they should be used for, and not just settle for a tool because it can do a lot of things. Most times, that just ends up being more expensive and more stressful.

Philip: We are getting close to the top of the hour, and I wanted to go around the table real quick. We’ll start with Addy. What are you guys working on? What do you think is the next big thing, and what should we be looking out for from disguise?

Addy: For us, it’s all about building entire ecosystems, so the next big thing for us is an overarching cloud infrastructure that manages all of your content, 3D and 2D, and version-controls it, so asset management is easier overall.

Philip: Fantastic! And Anatoly, what’s big on your horizon? What’s next for you guys?

Anatoly: We’re thinking about a horizon of 10 to 15 years. We’re coming to a completely new world where people live in an entertainment economy and the most valuable currency is time. We’re creating a time-based tokenizing system to create a reputation ratio between the duration of the content and the actual duration of watching it. We do believe that content created by non-professional users, let’s say user-generated content, is the next big thing, because of the phenomenon where two bloggers in their seats with an iPhone can attract way more attention than a regular OB van team with its broadcast network. We see that ads and budgets are migrating to personalities. What comes next is all about the metaverse, and that’s why we focused on our telepresence system, which allows you to experience VR in a zero-latency way, which is actually teleportation. This might lead to some ideas about digitizing the real world in cinematic quality in real time. We’re working on that and on tokenizing time.

Philip: Fantastic! And I see that Mohammad has joined us. Quickly, Mohammad, can you talk about some of the things you’ve done and then what’s big in your world? What do you see as the next big thing that’s going to be out there for the XR/VR world?

Mohammad Ali Abbaspour: Thank you so much. I’m just enjoying hearing the opinions of our friends and experts. Sorry, I was in another meeting, so I joined very late. We are engaged in XR and AR, as you may know, and we are involved in immersive experiences and also virtual overlays for the industry. Our main target right now is personalizing the feeds and the content for fans in real time, and I believe that with the power of AR/XR, and obviously AI and machine learning, it is something that we can achieve. In fact, it has already been achieved; it just needs more focus on the commercial side to become available to fans and to rights holders in the industry. So, yeah, I enjoy hearing what the others are saying.

Philip: Fantastic! Amar, over to you. What’s big in your horizons and what do you think is coming down the pipe? What are you working on?

Amar Hina: Honestly, it’s a mix of things because currently, I’m focusing more on the sports side and it is limited with the time of the game itself so it’s a little bit hard sometimes with the main sports. I’m not going to mention any sports in specific but in some sports, it’s so hard to keep the output entertaining…

Philip: Cricket! It’s Cricket. It lasts for like five days.

Amar: Cricket is the easiest. But yeah, it’s a pool of innovation because of the length of time. For football, because of the lack of time, in 90 minutes you have to make all the entertainment count by adding extra elements all the time while utilizing the data and stats and, at the same time, building more commercial assets, as Mohammad just mentioned. The commercial assets within the game itself are important to me for maintaining the business, and I believe, as our colleague mentioned, the fans are the future. But how to involve them without affecting the sport is something that might be challenging, and there’s also the issue of making it more accessible. I believe more output will be connected to mobile phones and to new platforms that might show up anytime, and we should be ready to have special output for them.

Philip: Justin, what do you have going on? What should people be looking for and what big tech in the industry do you think is coming down the pipe over the next 12 to 18 months?

Justin: We’re hitting our stride with a lot of these tools and we’re starting to see not just like films and TV shows that are utilizing these tools to make cool images and stuff but we’re able to be more efficient and tell more stories from more perspectives because the world’s still very content hungry, everybody wants the new thing to watch. We’re excited to be able to provide that but also have the toolset to do it in a humane way for ourselves to where we’re able to keep it fun and exciting and keep the joy of storytelling in our hearts to where we can make things that matter. But it’s almost like barriers are getting out of the way.

Philip: Fantastic! Well, first, let me thank everybody for being a part of this second Visionaries. We’re really moving this forward, and I can’t thank you enough for the insights. I’m sure the audience learned a lot. I learned a lot personally, and I’d like to thank everybody again for taking the time out on a Wednesday. I look forward to everybody joining us next month, when we’re going to be talking about AI. Thanks again and have a good evening.