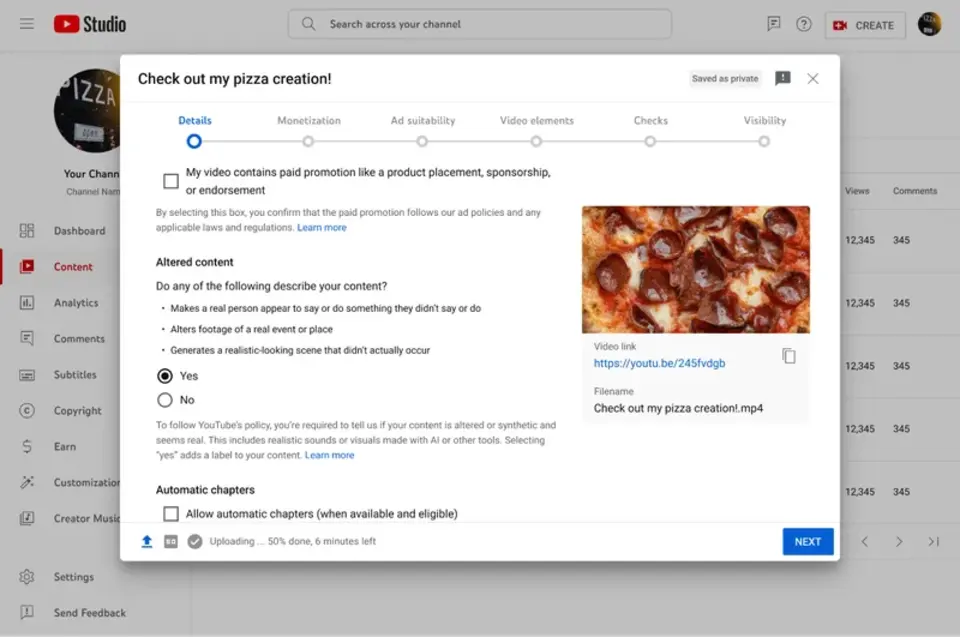

YouTube is set to implement a new Creator Studio policy requiring creators to tell viewers when realistic-looking content was made with altered or synthetic media, including generative AI.

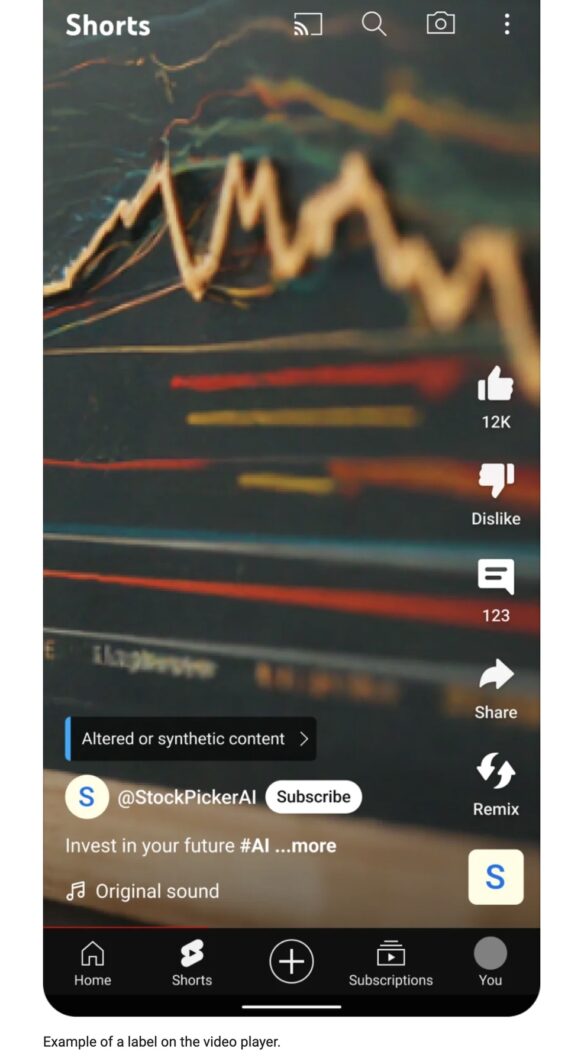

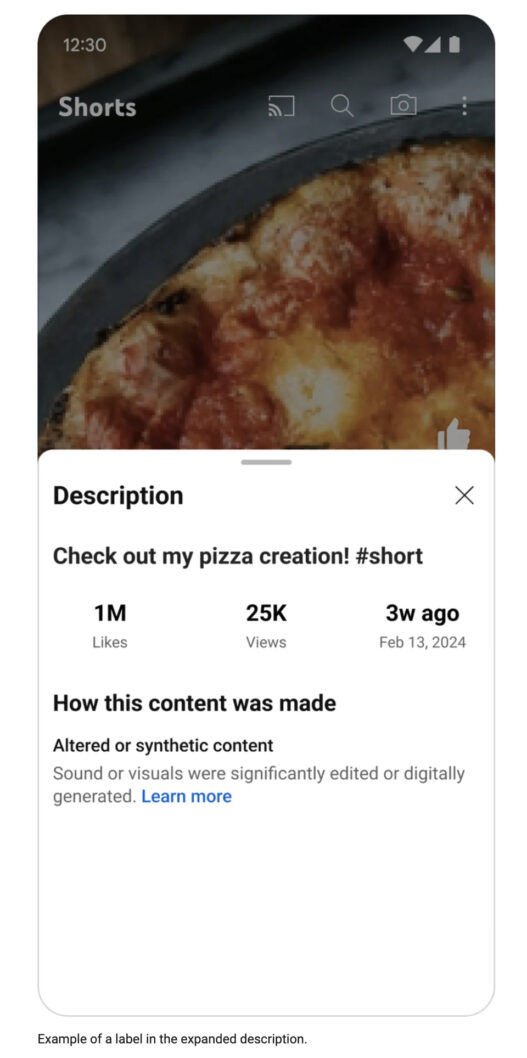

The rule covers any content a viewer could easily mistake for a real person, place, or event. The disclosure appears as a label in the video description or, in some cases, more prominently on the video player itself. Content that is clearly unrealistic, such as animation or special effects, is exempt, as are videos where generative AI was used only for behind-the-scenes production support.

Objective of the Disclosure Policy

The policy is meant to increase transparency with viewers and build trust between creators and their audience. YouTube wants viewers to know when they are watching content that has been digitally altered or generated, especially when that content could be mistaken for reality.

Content Requiring Disclosure

Specific instances requiring disclosure include:

- Using the digital likeness of people, for example by swapping faces or using AI to mimic someone's voice.

- Altering footage of real events or places, such as making it appear that an actual building is on fire or changing a real cityscape so it looks different from reality.

- Creating realistic but fictional scenes, such as a tornado heading toward a real town.

Enforcement and Penalties for Non-Compliance

YouTube plans to roll out the disclosure labels gradually, starting with the mobile app and then expanding to desktop and TV. Labels on videos about sensitive topics such as health, news, elections, or finance will be more prominent. If creators fail to disclose such content, YouTube has warned that it may add labels itself, particularly when the content could confuse or mislead viewers.

Challenges and Criticisms

Critics have questioned whether labeling misleading content, rather than removing it, is an effective way to combat misinformation. They argue that labels alone may not be enough to curb its spread on large platforms, pointing to similar practices on X, where disclaimers are placed under posts containing false information.

News source: https://blog.youtube/news-and-events/disclosing-ai-generated-content/